Large Scale DRL Robot Exploration Framework

Large Scale DRL Robot Exploration Framework

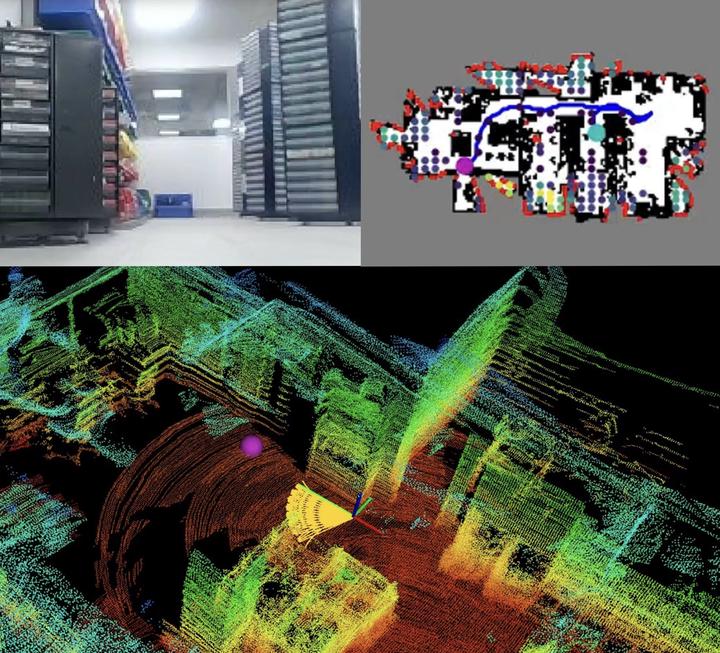

This project proposes a deep reinforcement learning (DRL) based reactive planner to solve large-scale Lidar-based autonomous robot exploration problems in 2D action space. The DRL-based planner enables agents to reactively plan exploration paths by making implicit predictions about unknown areas, based on learned estimations of the underlying transition model of the environment.

Overview

This work presents a deep reinforcement learning-based reactive planner for large-scale autonomous robot exploration using Lidar sensors. The approach relies on learned attention mechanisms to capture long-term dependencies at different spatial scales, enabling robots to reason about their entire belief over known areas and make intelligent exploration decisions.

The system utilizes ground truth information (privileged learning) to guide environment estimation during training, combined with a graph rarefaction algorithm that allows models trained in small-scale environments to successfully scale to large-scale scenarios.

Key Innovation: Alpha Conditioning System

The enhanced system introduces alpha conditioning (α ∈ [0,1]) for dynamic exploration-exploitation control:

- α = 0.0: Pure exploitation (efficient, direct paths)

- α = 0.5: Balanced exploration-exploitation

- α = 1.0: Pure exploration (frontier-seeking behavior)

Core Technical Components

1. Attention-Based Architecture

- Graph Attention Networks: Process variable-size spatial graphs (1-360 nodes)

- Multi-Head Attention: Captures long-term dependencies across different spatial scales

- Encoder-Decoder Structure: 6-layer transformer encoder + 1-layer decoder

- Dynamic Graph Processing: QuadTree-based spatial indexing for efficient node management

2. Alpha Conditioning Integration

The α parameter provides continuous behavioral control through:

- Policy Network: Alpha embedding added to current state features

- Critic Network: Alpha concatenated to action features

- Adaptive Entropy: Entropy targets vary with α values

- Reward Shaping:

r(α) = a·r_coverage + α·r_exploration

3. Privileged Learning Framework

- Ground Truth Critic: Complete environment information for stable training

- Environment Estimation: Learned transition model predictions

- Graph Rarefaction: Enables scaling from small to large environments

- Distributed Training: 16 parallel workers with Ray

4. Lidar-Based Perception

- 360° LiDAR Simulation: Ray-casting sensor model

- Multi-Resolution Mapping: 0.4m cells, 4.0m nodes, 16m sensor range

- Frontier Detection: Boundary-based exploration target identification

- Dynamic Environment Representation: Real-time map updating

Technical Approach

Deep Reinforcement Learning Framework

- Soft Actor-Critic (SAC): With adaptive entropy targeting based on α

- Distributed Training: 16 parallel workers using Ray framework

- Experience Replay: 28-dimensional transitions including α values

- Per-Experience Alpha Loss: Individual entropy targets for fine-grained control

Spatial Reasoning and Graph Processing

- Variable-Size Graph Networks: Handle 1-360 nodes dynamically

- Attention Mechanisms: Capture long-term spatial dependencies

- QuadTree Data Structure: Efficient spatial indexing and node management

- Graph Rarefaction Algorithm: Enable scaling from small to large environments

Learning and Adaptation

- Privileged Learning: Ground truth information guides training

- Alpha-Conditioned Rewards: Dynamic reward shaping based on exploration parameter

- Implicit Environment Modeling: Learn transition models for unknown area prediction

- Multi-Scale Spatial Reasoning: Process information at different spatial resolutions

Methodology

Environment Simulation

- 2D Action Space: Continuous control for autonomous navigation

- Lidar-Based Perception: 360° sensor simulation with 16m range

- Multi-Resolution Representation: 0.4m cell resolution, 4.0m node resolution

- Dynamic Map Updates: Real-time environment state tracking

Training Pipeline

- Distributed SAC: Soft Actor-Critic with 16 parallel workers

- Alpha Sampling: Random α values from [0,1] during training

- Ground Truth Supervision: Privileged learning with complete environment information

- Experience Buffer: 28-dimensional state-action-reward-alpha transitions

Evaluation Framework

- Benchmark Environment: 130m × 100m large-scale scenarios

- Hardware Validation: Real robot deployment and testing

- Comparative Analysis: Against state-of-the-art exploration planners

- Scalability Testing: From small-scale training to large-scale deployment

Performance Results

The proposed approach demonstrates significant improvements over state-of-the-art planners in a 130m × 100m benchmark scenario:

- 12% improvement in path length efficiency

- 6% improvement in makespan (task completion time)

- 60% reduction in planning time

- Successful hardware validation on real robot systems

Key Metrics

- Exploration Coverage: Percentage of environment explored

- Path Efficiency: Total distance traveled vs. optimal path

- Planning Speed: Real-time decision making capability

- Scalability: Performance maintenance across environment sizes

Applications

- Autonomous Robot Exploration: Large-scale environment mapping and navigation

- Search and Rescue Operations: Unknown environment exploration with time constraints

- Environmental Monitoring: Systematic coverage of large outdoor areas

- Space Exploration: Autonomous rovers for planetary surface exploration

- Industrial Inspection: Warehouse and facility autonomous surveying

- Agricultural Monitoring: Field mapping and crop surveillance

Technologies and Frameworks

Core Technologies

- PyTorch: Deep learning framework for neural network implementation

- Ray: Distributed computing framework for parallel training

- Soft Actor-Critic (SAC): Advanced reinforcement learning algorithm

- Graph Neural Networks: Attention-based spatial reasoning

- LiDAR Simulation: Ray-casting sensor models

System Components

- Distributed Training Infrastructure: Multi-worker parallel learning

- Graph Processing Pipeline: QuadTree spatial data structures

- Real-time Visualization: Training progress and behavior monitoring

- Hardware Integration: Real robot deployment capabilities

- Simulation Environment: Custom 2D exploration scenarios

Technical Challenges Addressed

Scalability and Performance

- Large-Scale Environment Navigation: Handling 130m × 100m exploration scenarios

- Real-time Decision Making: 60% faster planning compared to existing methods

- Memory Efficiency: Variable-size graph processing (1-360 nodes)

- Computational Optimization: Distributed training across 16 parallel workers

Learning and Adaptation

- Exploration-Exploitation Balance: Alpha conditioning for behavioral control

- Environment Generalization: Graph rarefaction for scale transfer

- Partial Observability: Implicit prediction of unknown areas

- Attention Mechanism Design: Long-term spatial dependency modeling

System Integration

- Hardware Deployment: Successful real robot validation

- Sensor Integration: LiDAR-based perception and mapping

- Multi-Resolution Processing: 0.4m to 4.0m spatial scales

- Dynamic Graph Management: Efficient spatial data structures

Research Contributions

Novel Methodological Advances

- Alpha Conditioning System: Fine-grained exploration-exploitation control

- Attention-Based Spatial Reasoning: Multi-scale environment understanding

- Privileged Learning Framework: Ground truth guided training for stability

- Graph Rarefaction Algorithm: Scalable model transfer methodology

Performance Achievements

- 12% path length improvement over state-of-the-art methods

- 6% makespan reduction in exploration tasks

- 60% planning time decrease for real-time applications

- Hardware validation demonstrating real-world applicability

Technical Innovation

- Variable-Size Graph Networks: Handling dynamic spatial representations

- Distributed SAC Implementation: Scalable reinforcement learning architecture

- Multi-Resolution Environment Modeling: Efficient spatial processing

- Real-time Frontier Detection: Dynamic exploration target identification